Qwen 3.5: Complete Guide to Alibaba's Flagship Open-Source Model

Qwen 3.5 (also written Qwen3.5) is Alibaba Cloud's latest flagship open-source model, released on February 16, 2026. It is a 397-billion-parameter Mixture-of-Experts (MoE) vision-language model that activates only 17B parameters per token, so each token costs roughly as much compute as a 17B dense model while the model competes with frontier systems on many benchmarks. It ships under the Apache 2.0 license, supports 201 languages and dialects, and handles text, images, and video natively in a single unified model, with no separate VL variant needed.

Official Launch Trailer

Qwen3.5 is here.

— Qwen (@Alibaba_Qwen) February 16, 2026


Technical Specifications

Qwen 3.5 is a sparse Mixture-of-Experts (MoE) model that activates only a small fraction of its total parameters for each token. This design gives it the quality of a much larger dense model while keeping inference costs low. Here's the complete spec sheet:

| Specification | Value |
|---|---|
| Total parameters | 397 billion |
| Active parameters per token | 17 billion |
| Architecture | Hybrid Gated DeltaNet + Gated Attention + MoE |
| Number of layers | 60 |
| MoE configuration | 512 total experts, 10 routed + 1 shared active |
| Context window (native) | 262,144 tokens |
| Context window (extended via YaRN) | 1,010,000 tokens (~1M) |
| Vocabulary size | 250,000 tokens (69% larger than Qwen 3) |
| Languages supported | 201 languages and dialects |
| Input modalities | Text + Image + Video |
| Output modalities | Text only |
| Thinking mode | Built-in (toggle on/off via API) |
| License | Apache 2.0 |
| HuggingFace | Qwen/Qwen3.5-397B-A17B |
| Release date | February 16, 2026 |

For context, the previous open-weight Qwen 3 flagship (Qwen3-235B-A22B) activated 22B of its 235B parameters per token. Qwen 3.5 raises the total parameter count by roughly 70% (235B to 397B) while cutting active parameters to 17B, an efficiency gain made possible by the new hybrid architecture.
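The efficiency claim is easy to check with the headline counts above. This back-of-the-envelope sketch computes the share of parameters active per token for both models; it uses only the totals from the spec table and ignores embedding, attention, and router details.

```python
# Active vs. total parameters, using only the headline counts quoted in this guide (billions).
MODELS = {
    "Qwen3-235B-A22B": (235, 22),
    "Qwen3.5-397B-A17B": (397, 17),
}

def active_fraction(total_b: float, active_b: float) -> float:
    """Share of all parameters that participate in computing one token."""
    return active_b / total_b

for name, (total, active) in MODELS.items():
    print(f"{name}: {active}B of {total}B active ({active_fraction(total, active):.1%})")

# Relative growth in total parameter count from Qwen 3 to Qwen 3.5.
growth = MODELS["Qwen3.5-397B-A17B"][0] / MODELS["Qwen3-235B-A22B"][0] - 1
print(f"Total parameters grew by {growth:.0%}")
```

The active share drops from about 9.4% to about 4.3% of the weights, while the total grows by about 69%.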

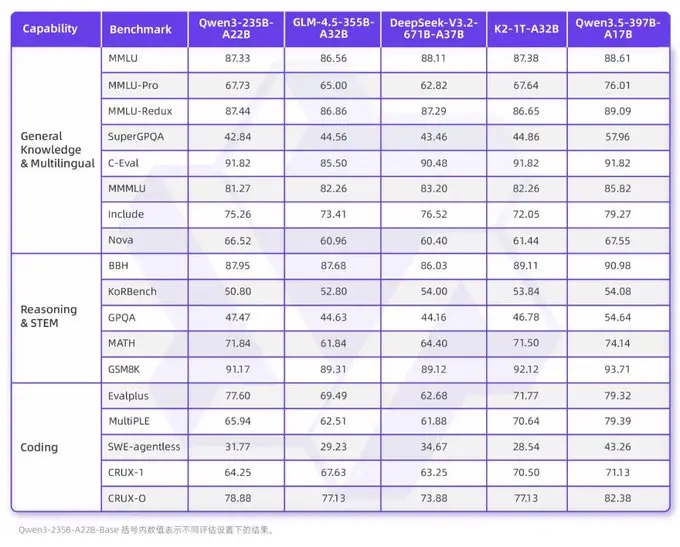

MoE Model Comparison

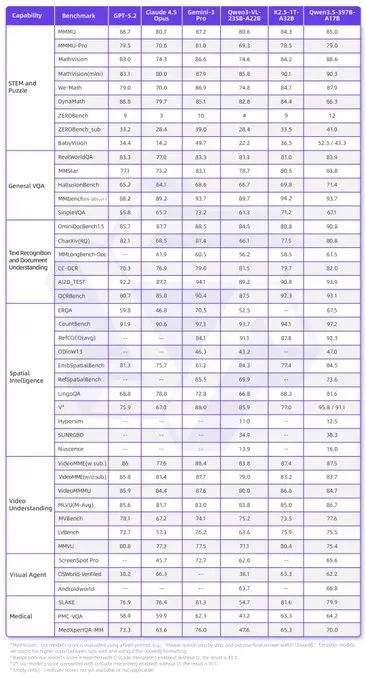

How does Qwen 3.5 compare to other large MoE models on foundational benchmarks? Here's the full picture:

Qwen 3.5 leads across most categories despite activating only 17B parameters — fewer than any competitor in this comparison.

Architecture Deep Dive

Qwen 3.5 introduces a hybrid architecture that combines two different attention mechanisms. This is one of the most technically interesting aspects of the model and directly explains its efficiency gains.

Gated DeltaNet (Linear Attention)

The majority of layers use Gated DeltaNet, a linear attention mechanism that Qwen first adopted in its experimental Qwen3-Next family. Standard softmax attention does work that grows with the square of the sequence length and keeps a key-value cache that grows with every token; Gated DeltaNet instead compresses the context into a fixed-size state per layer, so compute grows linearly and memory for those layers stays constant. This is what lets Qwen 3.5 handle contexts of up to 1M tokens without the memory blow-up of a traditional transformer.

In practical terms: longer prompts consume significantly less GPU memory than they would with a standard attention model of equivalent quality.
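To make the fixed-size-state idea concrete, here is a toy, pure-Python version of the gated delta rule as described in the Gated DeltaNet literature: S_t = α_t · S_{t-1}(I − β_t k_t k_tᵀ) + β_t v_t k_tᵀ, with output o_t = S_t q_t. It is a single head with no normalization, chunked parallelism, or learned projections, and it is not Qwen's production kernel; the point it illustrates is that the state stays d_v × d_k no matter how many tokens are processed.

```python
from typing import Iterable, List, Sequence, Tuple

Matrix = List[List[float]]
Vector = List[float]
Token = Tuple[Sequence[float], Sequence[float], Sequence[float], float, float]  # q, k, v, alpha, beta

def matvec(m: Matrix, x: Sequence[float]) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(row[j] * x[j] for j in range(len(x))) for row in m]

def gated_delta_step(state: Matrix, q: Sequence[float], k: Sequence[float],
                     v: Sequence[float], alpha: float, beta: float) -> Tuple[Matrix, Vector]:
    """One token of the gated delta rule.

    state has shape (d_v, d_k). alpha in (0, 1] decays the old memory;
    beta in [0, 1] controls how strongly the new (k, v) association is written.
    """
    sk = matvec(state, k)  # S_{t-1} k: the value the memory currently returns for key k
    new_state = [
        [alpha * (state[i][j] - beta * sk[i] * k[j]) + beta * v[i] * k[j]
         for j in range(len(k))]
        for i in range(len(v))
    ]
    return new_state, matvec(new_state, q)  # o_t = S_t q_t

def run_sequence(tokens: Iterable[Token], d_k: int, d_v: int) -> Tuple[Matrix, List[Vector]]:
    """Process tokens left to right; memory use is independent of sequence length."""
    state = [[0.0] * d_k for _ in range(d_v)]
    outputs = []
    for q, k, v, alpha, beta in tokens:
        state, out = gated_delta_step(state, q, k, v, alpha, beta)
        outputs.append(out)
    return state, outputs

if __name__ == "__main__":
    e0 = [1.0, 0.0, 0.0]
    # Write value [2, 3] under key e0, then overwrite it with [5, 7] (the "delta" update).
    _, outs = run_sequence([(e0, e0, [2.0, 3.0], 1.0, 1.0),
                            (e0, e0, [5.0, 7.0], 1.0, 1.0)], d_k=3, d_v=2)
    print(outs)  # [[2.0, 3.0], [5.0, 7.0]]
```

The second write replaces the first value instead of adding to it, which is what distinguishes the delta rule from plain linear attention.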

Gated Attention (Standard)

A subset of layers still uses standard softmax attention, with a learned gate applied to its output. The two layer types are interleaved in a fixed pattern, so every token passes through both: the linear layers keep cost low across long contexts, while the periodic full-attention layers provide exact lookups over every previous token where precise retrieval matters.
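The sketch below lays out a 60-layer stack that interleaves the two layer types in a fixed, repeating block and counts how many layers keep a growing key-value cache. The 3:1 ratio of DeltaNet to full-attention layers is an assumption borrowed from the Qwen3-Next design; check the official model card for Qwen 3.5's exact layout.

```python
from collections import Counter
from typing import List

def hybrid_layout(num_layers: int = 60, linear_per_full: int = 3) -> List[str]:
    """Repeat a block of `linear_per_full` linear-attention layers followed by
    one full-attention layer. The default 3:1 ratio is an assumption."""
    block = ["gated_deltanet"] * linear_per_full + ["gated_attention"]
    if num_layers % len(block) != 0:
        raise ValueError("num_layers must be a multiple of the block size")
    return block * (num_layers // len(block))

def kv_cache_fraction(layout: List[str]) -> float:
    """Share of layers whose key-value cache grows with context length;
    linear-attention layers keep a fixed-size state instead."""
    return layout.count("gated_attention") / len(layout)

if __name__ == "__main__":
    layout = hybrid_layout()
    print(Counter(layout))
    print(f"Layers with a growing KV cache: {kv_cache_fraction(layout):.0%}")
```

Under this assumed layout, only a quarter of the layers pay the per-token KV-cache cost that a standard transformer pays in every layer.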

Sparse Mixture of Experts

On top of the hybrid attention, Qwen 3.5 uses an MoE architecture with 512 total experts. For each token, only 11 experts are activated (10 routed + 1 shared), keeping inference fast. The result: the model has access to 397B parameters of knowledge while only running 17B parameters' worth of compute per token.
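Here is a minimal sketch of per-token routing with a shared expert: a router scores every routed expert, the top 10 are kept and their weights renormalized, and one always-on shared expert is added. This is a generic softmax top-k router written for illustration; the exact gating function and normalization Qwen 3.5 uses are assumptions here, as is treating the shared expert as separate from the 512, and the logits are random stand-ins for a learned router.

```python
import math
import random
from typing import Dict, List, Union

NUM_ROUTED_EXPERTS = 512  # per the spec table (assumed to exclude the shared expert)
TOP_K = 10                # routed experts selected per token
SHARED = "shared"         # key for the single always-on shared expert

ExpertId = Union[int, str]

def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over the router logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits: List[float], top_k: int = TOP_K) -> Dict[ExpertId, float]:
    """Pick the top-k routed experts, renormalize their gate weights,
    then add the shared expert, which every token uses."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in top)
    weights: Dict[ExpertId, float] = {i: probs[i] / norm for i in top}
    weights[SHARED] = 1.0  # illustrative: shared expert output added with unit weight
    return weights

if __name__ == "__main__":
    rng = random.Random(0)
    # Random stand-ins for the logits a learned router would produce for one token.
    logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_ROUTED_EXPERTS)]
    chosen = route_token(logits)
    routed = sorted(k for k in chosen if k != SHARED)
    print(f"{len(chosen)} experts run for this token: {routed} + {SHARED}")
```

Only the selected experts' feed-forward weights are used for this token, which is why compute tracks the 17B active parameters even though all 397B must be held in memory.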

Unified Vision-Language Model

Previous Qwen vision models required a separate VL (Vision-Language) variant. Qwen 3.5 merges everything into a single checkpoint. Vision capabilities are built in through early fusion — not bolted on as an adapter. This means the same model that writes code, answers questions, and reasons through math can also analyze images and watch videos. For image generation rather than understanding, see Qwen-Image-2.0 — Alibaba's dedicated 7B text-to-image and editing model.

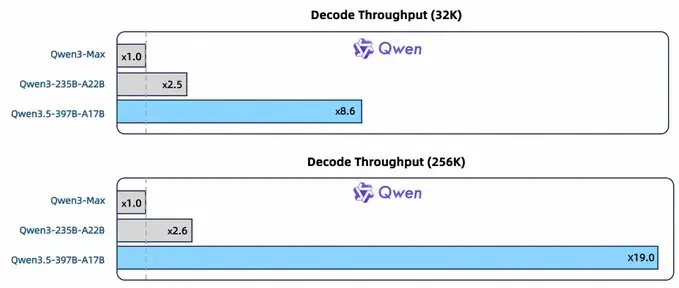

Inference Speed: Decode Throughput

The hybrid architecture pays off in inference speed. Thanks to Gated DeltaNet's linear scaling, Qwen 3.5 decodes much faster than Qwen3-Max, and the gap widens as the context grows:

Qwen 3.5 decode throughput: 8.6× faster at 32K and 19× faster at 256K context vs Qwen3-Max.

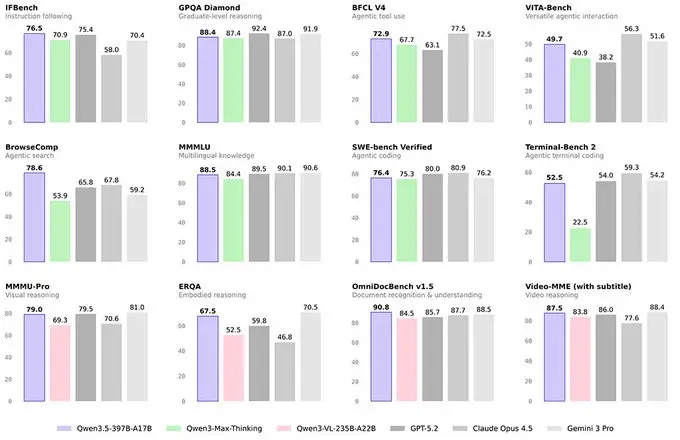

Benchmarks vs GPT-5.2, Claude Opus 4.5 & Gemini 3 Pro

Qwen 3.5 was evaluated against the current frontier models across a wide range of benchmarks. The results show it's competitive across the board, with particular strengths in instruction following, multimodal document understanding, and agentic tasks.

| Benchmark | Category | Qwen3.5 | GPT-5.2 | Claude Opus 4.5 | Gemini 3 Pro |
|---|---|---|---|---|---|
| MMLU-Pro | Knowledge | 87.8 | 87.4 | 89.5 | 89.8 |
| GPQA | Doctoral Science | 88.4 | 92.4 | 87.0 | 91.9 |
| IFBench | Instruction Following | 76.5 🥇 | 75.4 | 58.0 | 70.4 |
| MultiChallenge | Multi-turn Conversation | 67.6 🥇 | 57.9 | 54.2 | 64.2 |
| SWE-bench Verified | Coding | 76.4 | 80.0 | 80.9 | 76.2 |
| LiveCodeBench v6 | Live Coding | 83.6 | 87.7 | 84.8 | 90.7 |
| HLE | Humanity's Last Exam | 28.7 | 35.5 | 30.8 | 37.5 |
| MMMU | Multimodal Understanding | 85.0 | 86.7 | 80.7 | 87.2 |
| MathVision | Multimodal Math | 88.6 🥇 | 83.0 | 74.3 | 86.6 |
| OmniDocBench1.5 | Document Understanding | 90.8 🥇 | 85.7 | 87.7 | 88.5 |
| BrowseComp | Browser Automation | 69.0 🥇 | 65.8 | 67.8 | 59.2 |
| NOVA-63 | Agentic Tasks | 59.1 🥇 | 54.6 | 56.7 | 56.7 |
| AndroidWorld | Mobile Automation | 66.8 🥇 | n/a | n/a | n/a |

Visual comparison across 12 major benchmarks — Qwen 3.5 (purple) leads on instruction following, agentic tasks, and document understanding.

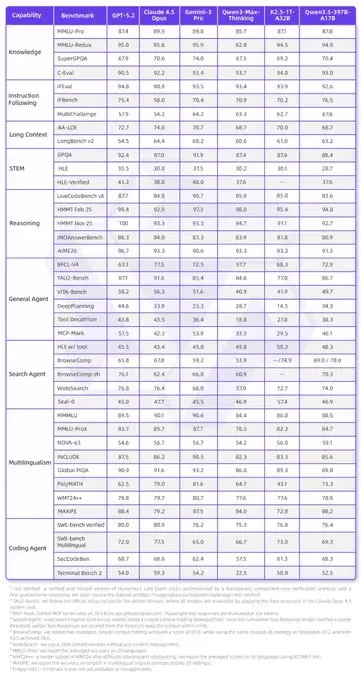

Full Benchmark Details

For a more granular view, here are the complete benchmark tables covering text-only and multimodal evaluations:

Text benchmark results — note the dominant performance on Agentic and Search Agent categories.

Multimodal benchmark results — leading scores in Visual Agent and Document Understanding categories.

Key Takeaways

- Qwen 3.5 leads in: instruction following (IFBench), multi-turn conversation (MultiChallenge), multimodal math (MathVision), document understanding (OmniDocBench), and all agentic benchmarks (BrowseComp, NOVA-63, AndroidWorld).

- Claude Opus 4.5 leads in: real-world coding tasks (SWE-bench Verified at 80.9).

- Gemini 3 Pro leads in: knowledge (MMLU-Pro), competitive programming (LiveCodeBench), HLE, and general multimodal understanding (MMMU).

- GPT-5.2 leads in: doctoral-level science (GPQA) and abstract reasoning (ARC-AGI-2 at 54.2%).

Worth noting: Qwen 3.5 outperforms the previous closed-source Qwen3-Max-Thinking on most benchmarks — meaning an open-weight, Apache 2.0 model now exceeds what was Alibaba's best proprietary offering just months ago.


Multimodal Capabilities

Qwen 3.5 is a unified vision-language model. It processes text, images, and video through a single architecture — no need for a separate VL model. Here's what it can handle:

Image Understanding

- Document analysis: Charts, graphs, tables, PDFs, handwritten notes — scores 90.8 on OmniDocBench1.5, the best of any model tested.

- Medical imaging: Community testers report strong performance with X-rays, histopathology slides, and dermatology images — producing structured diagnoses with appropriate caveats.

- Technical diagrams: Can parse complex numerical graphs and explain them in accessible language.

- Creative tasks: SVG generation from reference photos, wireframe-to-code conversion from hand-drawn sketches.

Video Understanding

- Accepts video input up to 2 hours in length.

- Accurately describes scenes, counts animals/objects, identifies locations, and summarizes action sequences.

- Community tests with African savanna footage showed correct animal counts and accurate location guesses (Serengeti, Tanzania / Masai Mara, Kenya).

Code from Vision

One of the most practical multimodal use cases: Qwen 3.5 can take a hand-drawn wireframe and generate functional HTML/CSS/JS. Community testers built complete portfolio websites, browser-based OS interfaces (with working calculators, games, and settings panels), and even 3D simulations — all from text or image prompts.

Agentic AI Features

Alibaba positions Qwen 3.5 as a model for the "agentic AI era." This isn't just marketing — the benchmarks back it up. Qwen 3.5 leads on every agent-focused evaluation:

- BrowseComp (browser automation): 69.0 — beats GPT-5.2 (65.8) and Claude Opus 4.5 (67.8).

- NOVA-63 (general agentic tasks): 59.1 — top of the field.

- AndroidWorld (mobile app automation): 66.8 — a benchmark where competitors haven't even published scores.

In practice, when paired with an agent framework that supplies screenshots and executes the actions it chooses, Qwen 3.5 can operate mobile and desktop applications: switching between apps, filling out forms, navigating websites, and completing multi-step workflows. Its vision capabilities let it read what is on screen and decide what to do next.

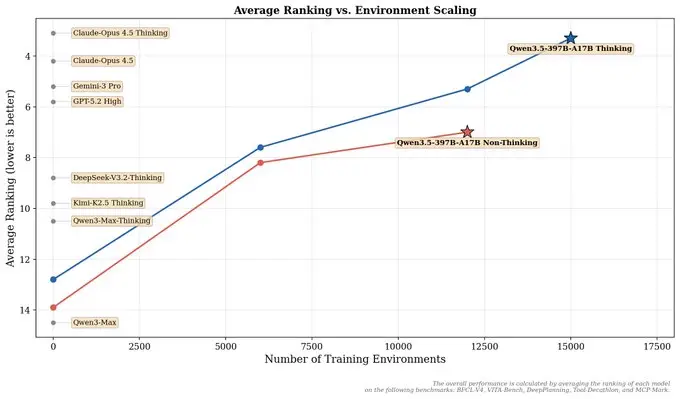

Agentic scaling: Qwen 3.5 (Thinking) achieves the best average ranking as the number of training environments increases.


Tool Use & Function Calling

Qwen 3.5 supports native function calling, structured output (JSON mode), and tool use. The API is OpenAI-compatible, making it a drop-in replacement in many existing workflows. It also powers Qwen Code, Alibaba's developer CLI for delegating coding tasks via natural language.
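Because the API follows the OpenAI chat-completions format, a tool is declared as a JSON schema and the model replies with tool_calls that your code executes. The sketch below shows the client-side half of that loop using only the standard library: a tool declaration and a dispatcher that turns the model's tool calls into role "tool" messages to send back. The get_weather tool is hypothetical, and the sample assistant message is hand-written to mirror the OpenAI response shape rather than captured from Qwen 3.5.

```python
import json
from typing import Any, Callable, Dict, List

# Tool declaration in the OpenAI-compatible "tools" format.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",  # hypothetical tool for illustration
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

def get_weather(city: str) -> Dict[str, Any]:
    """Stub implementation; a real tool would call a weather service."""
    return {"city": city, "forecast": "sunny", "temp_c": 21}

REGISTRY: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}

def dispatch_tool_calls(assistant_message: Dict[str, Any]) -> List[Dict[str, str]]:
    """Execute each requested tool and build the tool-result messages."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results

if __name__ == "__main__":
    # Hand-written example shaped like an assistant message that requests a tool.
    sample = {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {"name": "get_weather", "arguments": "{\"city\": \"Paris\"}"},
        }],
    }
    print(dispatch_tool_calls(sample))
```

In a full loop you would pass tools=TOOLS to client.chat.completions.create, append the assistant message and these tool messages to the conversation, and call the API again so the model can use the results.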


API Pricing

Qwen 3.5 is available through Alibaba Cloud's Model Studio as Qwen3.5-Plus. The pricing is aggressive — significantly cheaper than competing frontier models:

| Context Range | Input (per 1M tokens) | Output (per 1M tokens) |
|---|---|---|
| 0–256K tokens | $0.40 | $2.40 |
| 256K–1M tokens | $1.20 | $7.20 |

- Batch mode: 50% discount for asynchronous batch processing.

- Thinking mode: Same price regardless of whether thinking is enabled or disabled — no separate thinking surcharge.

- Model ID: qwen3.5-plus-2026-02-15

For reference, this pricing is roughly 70% cheaper than GPT-5 series API calls for comparable tasks, and about 60% cheaper than previous Qwen models. In the Chinese market, pricing starts as low as ¥0.8 per million tokens — approximately 1/18th the cost of Gemini 3 Pro.

Qwen 3.5 is also available for free testing on chat.qwen.ai with rate limits.
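The tiered prices translate into per-request costs roughly as follows. This sketch assumes the tier is chosen by the request's input length with the whole request billed at that tier's rates, that the batch discount applies to both input and output, and that the first tier ends at exactly 256,000 input tokens; verify the billing rules on Alibaba Cloud's pricing page before relying on the numbers.

```python
# (max input tokens for the tier, input $/1M tokens, output $/1M tokens), from the table above.
TIERS = [
    (256_000, 0.40, 2.40),    # 0-256K tier; exact boundary value is an assumption
    (1_000_000, 1.20, 7.20),  # 256K-1M tier
]
BATCH_MULTIPLIER = 0.5  # 50% discount for asynchronous batch jobs

def request_cost(input_tokens: int, output_tokens: int, batch: bool = False) -> float:
    """Estimated USD cost of one request, billed entirely at its input tier's rates."""
    for max_input, in_price, out_price in TIERS:
        if input_tokens <= max_input:
            cost = input_tokens / 1e6 * in_price + output_tokens / 1e6 * out_price
            return cost * (BATCH_MULTIPLIER if batch else 1.0)
    raise ValueError("input exceeds the largest priced tier")

if __name__ == "__main__":
    print(f"${request_cost(100_000, 10_000):.3f}")              # 100K in, 10K out
    print(f"${request_cost(100_000, 10_000, batch=True):.3f}")  # same job in batch mode
    print(f"${request_cost(500_000, 20_000):.3f}")              # long-context request
```

Under these assumptions, a 100K-in/10K-out request costs about $0.064 ($0.032 in batch mode), and a 500K-in/20K-out request about $0.744.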

Running Locally

With 397B total parameters, Qwen 3.5 is a large model. Although only 17B parameters are active per token, memory requirements follow the total parameter count, because all experts must be loaded for the router to choose among them. Here's what you need:

Hardware Requirements

| Quantization | Approx. Size | Minimum RAM/VRAM | Recommended Hardware |
|---|---|---|---|
| BF16 (full precision) | ~780 GB | 800+ GB | Multi-GPU server with 800+ GB of total GPU memory (e.g. 8× H200 141GB) |
| Q8_0 | ~400 GB | 420+ GB | Mac Studio 512GB / Multi-GPU |
| Q4_K_XL | ~220 GB | 240+ GB | Mac Studio 256GB / 2× RTX 5090 plus enough system RAM to hold offloaded experts |
| Q2_K_XL | ~140 GB | 160+ GB | Mac Studio 192GB (slow) |

Important: 128GB unified-memory Macs (M4 Max, etc.) are not enough to run Qwen 3.5, even at aggressive quantization levels. You need at least 192GB of unified memory for Q2_K_XL, which runs slowly, and 256GB or more for Q4_K_XL, which in practice means a high-end Mac Studio. For more details on hardware options, see our hardware requirements guide.
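The sizes in the table follow from simple arithmetic: weight memory is roughly total parameters × bits per weight ÷ 8. The sketch below uses approximate effective bits-per-weight values for each format (assumptions; K-quants and dynamic "XL" variants mix precisions, so real files differ) and leaves out the KV cache, activations, and runtime overhead, which is why the minimum-memory column adds headroom.

```python
# Approximate effective bits per weight; these are assumptions for a rough
# estimate, not exact GGUF file sizes.
BITS_PER_WEIGHT = {"BF16": 16.0, "Q8_0": 8.5, "Q4_K_XL": 4.5, "Q2_K_XL": 2.8}
TOTAL_PARAMS = 397e9  # all experts must be loaded, so use total, not active (17e9)

def weight_gb(params: float, bits_per_weight: float) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9

if __name__ == "__main__":
    for fmt, bpw in BITS_PER_WEIGHT.items():
        print(f"{fmt:8s} ~{weight_gb(TOTAL_PARAMS, bpw):6.0f} GB")
    # For contrast: the footprint if only the active parameters had to be resident.
    print(f"17B active at BF16 would be only ~{weight_gb(17e9, 16):.0f} GB")
```

The gap between the last line (about 34 GB) and the BF16 total (about 794 GB) is the practical cost of the MoE design: compute scales with active parameters, memory with total parameters.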

Supported Frameworks

- HuggingFace Transformers — requires latest version

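- vLLM: a recent release with Qwen3.5 support (≥ 0.8.5 was the Qwen 3 requirement and predates the Gated DeltaNet layers; check the model card for the current minimum)

- SGLang: a recent release with Qwen3.5 support (≥ 0.4.6.post1 was the Qwen 3 requirement)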

- Ollama — via GGUF format

- LM Studio — via GGUF format

- llama.cpp — via GGUF format

GGUF quantizations are available from Unsloth on HuggingFace in formats ranging from Q2_K to Q8_0. For a complete local deployment walkthrough, check our Run Qwen Locally guide.

API & Developer Guide

The Qwen 3.5 API is OpenAI-compatible, which means you can use existing OpenAI SDK clients by simply changing the base URL and API key. Here's a quick start:

Python (OpenAI SDK)

```python
import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ["DASHSCOPE_API_KEY"],  # your Model Studio (DashScope) API key
    base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
)

response = client.chat.completions.create(
    model="qwen3.5-plus-2026-02-15",
    messages=[
        {"role": "user", "content": "Explain quantum entanglement simply."}
    ],
    extra_body={"enable_thinking": True},  # toggle thinking mode (False to disable)
)

print(response.choices[0].message.content)
```

Multimodal (Image Input)

```python
# Reuses the client created in the previous example.
response = client.chat.completions.create(
    model="qwen3.5-plus-2026-02-15",
    messages=[{
        "role": "user",
        "content": [
            {"type": "image_url", "image_url": {"url": "https://example.com/chart.png"}},
            {"type": "text", "text": "Explain this chart in simple words."},
        ],
    }],
)

print(response.choices[0].message.content)
```
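To send a local file (for example, a photographed wireframe) instead of a public URL, OpenAI-compatible endpoints generally accept a base64 data URL in the same image_url field. The helper below builds such a message with the standard library. That DashScope's compatible mode accepts data URLs for Qwen 3.5 is an assumption to confirm in its documentation, and the file name in the usage comment is hypothetical.

```python
import base64
import mimetypes
from typing import Any, Dict

def image_message(image_bytes: bytes, mime_type: str, prompt: str) -> Dict[str, Any]:
    """Build a user message that embeds raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
            {"type": "text", "text": prompt},
        ],
    }

def image_message_from_file(path: str, prompt: str) -> Dict[str, Any]:
    """Read a local image file, inferring its MIME type from the extension."""
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"not a recognized image type: {path}")
    with open(path, "rb") as f:
        return image_message(f.read(), mime_type, prompt)

# Usage with the client from the first example (hypothetical file name):
# msg = image_message_from_file("wireframe.png", "Turn this wireframe into HTML/CSS.")
# response = client.chat.completions.create(model="qwen3.5-plus-2026-02-15", messages=[msg])
```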

Recommended Sampling Parameters

| Mode | Temperature | Top-P | Top-K | Presence Penalty |
|---|---|---|---|---|
| Thinking mode | 0.6 | 0.95 | 20 | 0.0 |
| Standard mode | 0.7 | 0.8 | 20 | 1.5 |

Recommended max output tokens: 32,768 for general queries; up to 81,920 for complex math/coding tasks. The API also supports structured output (JSON mode) and function calling for tool-use workflows.
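The recommended settings can be kept as presets and sent with any HTTP client. This sketch builds (but does not send) a raw chat-completions request with Python's standard library. top_k and enable_thinking are not standard OpenAI fields, so they are placed at the top level of the JSON body, which is where the OpenAI SDK's extra_body merges them; the endpoint is assumed to be the compatible-mode base URL used earlier plus /chat/completions, and the DASHSCOPE_API_KEY environment variable name is an assumption.

```python
import json
import os
import urllib.request
from typing import Any, Dict, List

BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen3.5-plus-2026-02-15"

# Recommended sampling settings from the table above, keyed by thinking on/off.
PRESETS = {
    True:  {"temperature": 0.6, "top_p": 0.95, "top_k": 20, "presence_penalty": 0.0},
    False: {"temperature": 0.7, "top_p": 0.8, "top_k": 20, "presence_penalty": 1.5},
}

def build_request(messages: List[Dict[str, Any]], thinking: bool = True,
                  max_tokens: int = 32_768) -> urllib.request.Request:
    """Build a POST request for /chat/completions without sending it."""
    body = {"model": MODEL, "messages": messages, "max_tokens": max_tokens,
            "enable_thinking": thinking, **PRESETS[thinking]}
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {os.environ.get('DASHSCOPE_API_KEY', '')}"},
        method="POST",
    )

# To send: json.load(urllib.request.urlopen(build_request([...])))
```

Keeping both presets in one table makes it hard to accidentally combine the thinking-mode temperature with the standard-mode presence penalty.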

Community Testing Highlights

Within hours of launch, the community began stress-testing Qwen 3.5 across creative, technical, and practical tasks. Here are the standout results:

Coding & Creative Generation

- Browser OS: Generated a fully interactive browser-based operating system with working calculator, snake game, memory game, settings panel with wallpaper customization, and a Matrix-style screensaver — from a single prompt.

- 3D Games: Created functional 3D games (flight combat simulator, naval combat, jet ski simulator, Cube Runner 3D) in JavaScript without external libraries — using raw math only.

- SVG from Photos: Converted real photographs into detailed SVG replicas, capturing colors, shadows, and composition with notably higher fidelity than previous open-source models.

- Wireframe to Code: Turned hand-drawn portfolio wireframes into fully functional, styled portfolio websites with iterative improvement (the second attempt was described as significantly better than the first).

Vision & Medical Reasoning

- Graph Analysis: Given a complex numerical chart, produced an explanation that was described as "a masterclass in making complex numerical analysis accessible" — breaking down axes, curves, and key takeaways with concrete examples.

- Medical Imaging: When given X-rays, histopathology, and dermatology images in a single prompt, produced a structured clinical assessment bridging multiple specialties — radiology, pathology, and lab interpretation — into a coherent diagnostic picture, plus a compassionate draft patient email.

- Video Analysis: Accurately described an African savanna scene from video, correctly counting animals, identifying species (lions, zebras, deer), and guessing the filming location as Serengeti/Masai Mara.

Creative Writing

- Given an AI-generated image of two people in a room, produced a detailed romance novel outline with character backstories, motivations tied to visual cues in the image, a three-act structure, and even context for the specific cover image moment. Then successfully pivoted to a horror/true-crime version on request.

Testers consistently noted that Qwen 3.5's language quality has improved significantly — responses are more targeted, less embellished, and show better structural organization compared to previous versions.


Frequently Asked Questions

Is Qwen 3.5 Plus a different model from Qwen 3.5?

No. Qwen3.5-Plus is simply the hosted API version of the open-weight Qwen3.5-397B-A17B model, available through Alibaba Cloud's Model Studio. It comes with production features like a default 1M context window and built-in tool integration. The underlying model weights are the same.

Can I run Qwen 3.5 on my Mac?

Only if it has at least 192GB of unified memory, and realistically 256GB or more. The 128GB M4 Max systems are too small, even with aggressive quantization. The Q2_K_XL quantization (~140GB) fits in 192GB but runs slowly, while Q4_K_XL needs ~220GB of disk space and ~240GB of memory, which calls for a 256GB Mac Studio. See our hardware requirements page for details.

How does Qwen 3.5 compare to Qwen 3?

Qwen 3.5 brings three major upgrades: (1) a hybrid attention architecture that dramatically reduces memory usage at long contexts, (2) unified multimodal capabilities — no separate VL model needed, and (3) a 69% larger vocabulary (250K vs 148K tokens) enabling better multilingual performance across 201 languages. It also outperforms the closed-source Qwen3-Max-Thinking on most benchmarks. See our full Qwen 3 guide for the previous generation's specs.

Is fine-tuning available?

Fine-tuning is not yet available through Alibaba Cloud's Model Studio as of launch. However, since the model is Apache 2.0 and weights are on HuggingFace, community fine-tuning with tools like LoRA/QLoRA is possible for those with sufficient hardware.

What's the thinking mode?

Like QwQ and other reasoning models, Qwen 3.5 can output its internal reasoning process in <think>...</think> tags before giving a final answer. This is enabled by default and can be toggled off via the API parameter enable_thinking: false. Both modes cost the same — no pricing premium for thinking.
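When you self-host the open weights and read raw completions, the reasoning and the answer arrive in one string. A small helper can separate them. This sketch assumes at most one reasoning block and tolerates a missing opening think tag, since some chat templates put that tag in the prompt rather than in the output; hosted APIs may instead return the reasoning in a separate response field, so check the response shape before parsing.

```python
from typing import Tuple

def split_thinking(text: str) -> Tuple[str, str]:
    """Return (reasoning, answer) from raw model output.

    Everything before the last </think> is treated as reasoning; if the
    closing tag is absent, the whole text is treated as the answer.
    """
    head, sep, tail = text.rpartition("</think>")
    if not sep:
        return "", text.strip()
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()

if __name__ == "__main__":
    raw = "<think>\nThe user wants a short answer.\n</think>\n\nEntangled particles share one joint state."
    reasoning, answer = split_thinking(raw)
    print(answer)
```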

What languages does it support?

Qwen 3.5 supports 201 languages and dialects, up from 119 in Qwen 3. The expanded 250K-token vocabulary enables 10–60% cost reduction for multilingual applications through more efficient tokenization.

Bottom Line

Qwen 3.5 is a genuinely competitive frontier model whose weights are free to download, deploy, and modify. It leads on agentic benchmarks, exceeds GPT-5.2 on instruction following and document understanding, and brings unified vision-language capabilities to the open-source world at a quality level not seen before. The aggressive API pricing makes it viable for production workloads, and the permissive Apache 2.0 license lets you self-host, including commercially, without usage restrictions.

If you're building AI agents, processing documents at scale, or need a capable multimodal model without vendor lock-in — Qwen 3.5 should be at the top of your evaluation list.