Qwen-Image-2.0: AI Image Generation & Editing

Qwen-Image-2.0 is Alibaba's unified image generation and editing model: a 7-billion-parameter model that creates native 2K images, renders multilingual text with high accuracy, and handles both generation and editing in a single architecture. Released on February 10, 2026, it replaces the previous 20B-parameter Qwen-Image series with a model that is nearly 3× smaller yet, by Alibaba's account, more capable. It targets photorealistic product shots, professional infographics with precise typography, and complex image edits, all in one model.

Navigate this guide:

- What Is Qwen-Image-2.0?

- Technical Specs & Architecture

- 5 Core Capabilities

- Official Launch Video

- Benchmarks & Rankings

- Qwen-Image-2.0 vs GPT-Image-1.5 vs FLUX.2 vs Nano Banana

- Text Rendering: The Killer Feature

- API Access & Pricing

- Running Locally & Hardware Requirements

- Quick-Start Code Examples

- Version History & Evolution

- Ecosystem & Community

- FAQ

What Is Qwen-Image-2.0?

Qwen-Image-2.0 is the next generation of Alibaba's text-to-image foundation model. Unlike its predecessor — which required separate models for generation (Qwen-Image) and editing (Qwen-Image-Edit) — version 2.0 unifies both tasks into a single 7B-parameter model. This means improvements to generation quality automatically enhance editing capabilities too, since both share the same weights.

The model is built on an MMDiT (Multimodal Diffusion Transformer) architecture, using an 8B Qwen3-VL encoder to understand text prompts and input images, feeding into a 7B diffusion decoder that generates output at up to 2048×2048 native resolution. It supports prompts up to 1,000 tokens long — enough for detailed creative direction including multi-paragraph infographic layouts, comic scripts, or complex editing instructions.

Technical Specs & Architecture

| Specification | Qwen-Image-2.0 | Qwen-Image v1 |
|---|---|---|
| Parameters | 7B | 20B |
| Architecture | MMDiT (Unified Gen+Edit) | MMDiT (Separate models) |
| Encoder | 8B Qwen3-VL | not listed |
| Decoder | 7B Diffusion | 20B Diffusion |
| Max Resolution | 2048×2048 (native 2K) | ~1328×1328 |
| Max Prompt Length | 1,000 tokens | ~256 tokens |
| Generation + Editing | Unified (one model) | Separate models required |
| Text Rendering | Professional-grade bilingual | Good (English-focused) |
| Inference Speed | 5–8 seconds | 10–15 seconds |
| License | Apache 2.0 (expected) | Apache 2.0 |

The architecture pipeline flows as: Text/Image Input → 8B Qwen3-VL Encoder → 7B Diffusion Decoder → 2048×2048 Output. The Qwen3-VL encoder handles both text understanding and image comprehension, which is what enables the unified generation-and-editing capability — the model "sees" existing images and understands editing instructions in the same way it processes generation prompts.

5 Core Capabilities

Alibaba highlights five major breakthroughs in Qwen-Image-2.0 compared to the previous generation:

1. Unified Generation & Editing

No more switching between models. Qwen-Image-2.0 handles text-to-image generation, single-image editing, multi-image compositing, background replacement, and style transfer — all in one model call. Since both tasks share weights, improvements compound: better generation automatically means better editing.

2. Native 2K Resolution

Most AI image generators create at 1024×1024 and then upscale. Qwen-Image-2.0 generates natively at 2048×2048, which means fine details — skin pores, fabric weave, architectural textures — are actually rendered during generation, not interpolated after the fact. The model supports multiple aspect ratios including 1:1, 16:9, 9:16, 4:3, 3:4, 3:2, and 2:3.
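To make the aspect-ratio list concrete, here is a minimal sketch that derives a width and height for each ratio at roughly the same pixel count as 2048×2048. The equal-area rule, the rounding to multiples of 16, and the helper name `dims_for_ratio` are assumptions for illustration (the 1664×928 size used in the quick-start example happens to be a multiple of 16); the source does not list the exact resolutions the model accepts.

```python
import math

# Aspect ratios listed in the text above.
ASPECT_RATIOS = ["1:1", "16:9", "9:16", "4:3", "3:4", "3:2", "2:3"]


def dims_for_ratio(ratio: str,
                   pixel_budget: int = 2048 * 2048,
                   multiple: int = 16) -> tuple[int, int]:
    """Return (width, height) close to pixel_budget for a 'W:H' ratio.

    Both sides are rounded to the nearest multiple of `multiple`
    (an assumed constraint, not one documented for Qwen-Image-2.0).
    """
    w_part, h_part = (int(p) for p in ratio.split(":"))
    # Solve width * height = pixel_budget with width / height = w_part / h_part.
    height = math.sqrt(pixel_budget * h_part / w_part)
    width = height * w_part / h_part

    def snap(value: float) -> int:
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


if __name__ == "__main__":
    for r in ASPECT_RATIOS:
        w, h = dims_for_ratio(r)
        print(f"{r:>5}: {w}x{h}")
```

Because of the rounding, non-square sizes land slightly above or below the 2048×2048 pixel count rather than exactly on it.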

3. 1,000-Token Prompt Support

Where most image models cap prompts at ~77 tokens (SDXL) or ~256 tokens, Qwen-Image-2.0 accepts up to 1,000 tokens. This enables direct generation of complex layouts like multi-slide presentations, detailed infographics, multi-panel comics, and posters with extensive text — all from a single prompt.
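When writing long layout prompts, it can help to check them against the 1,000-token limit before sending them. The sketch below is only an approximation: `rough_token_count` is a hypothetical stand-in that counts whitespace-separated words, not the model's actual tokenizer, and `check_prompt_budget` is an illustrative helper name. For a real check, pass in a counter backed by the tokenizer that ships with the model.

```python
from typing import Callable

MAX_PROMPT_TOKENS = 1000  # limit quoted in the text above


def rough_token_count(text: str) -> int:
    """Crude stand-in: whitespace word count, NOT the model's real tokenizer."""
    return len(text.split())


def check_prompt_budget(prompt: str,
                        count_tokens: Callable[[str], int] = rough_token_count,
                        limit: int = MAX_PROMPT_TOKENS) -> dict:
    """Report how much of the prompt budget a prompt uses."""
    used = count_tokens(prompt)
    return {
        "tokens": used,
        "limit": limit,
        "fits": used <= limit,
        "remaining": limit - used,
    }


if __name__ == "__main__":
    report = check_prompt_budget("A three-panel comic about a robot learning to paint")
    print(report)
```

The whitespace counter undercounts badly for Chinese and other text without spaces, so treat its result as a lower bound at best.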

4. Professional Typography Rendering

The model renders text on images with near-perfect accuracy across English, Chinese, and mixed-language content. It handles multiple calligraphy styles (including Emperor Huizong's "Slender Gold Script"), generates presentation slides with accurate timelines, and places text correctly on varied surfaces — glass whiteboards, clothing, magazine covers — respecting lighting, reflections, and perspective.

5. Photorealistic Visual Quality

Qwen-Image-2.0 produces extreme detail differentiation. In test scenes, the model distinguishes over 23 shades of green with distinct textures — from waxy leaf surfaces to velvety moss cushions. It handles photorealistic, anime, watercolor, and hand-drawn artistic styles with equal competence.

Generated by Qwen-Image-2.0 — note the differentiation between waxy leaves, velvety moss, and translucent foliage.


Official Launch Video

The Qwen team published a showcase video highlighting Qwen-Image-2.0's generation quality, text rendering, and editing capabilities. It was posted by Qwen (@Alibaba_Qwen) on February 10, 2026.

Benchmarks & Rankings

Qwen-Image-2.0 performs competitively against the best closed-source image models despite being significantly smaller and open-weight.

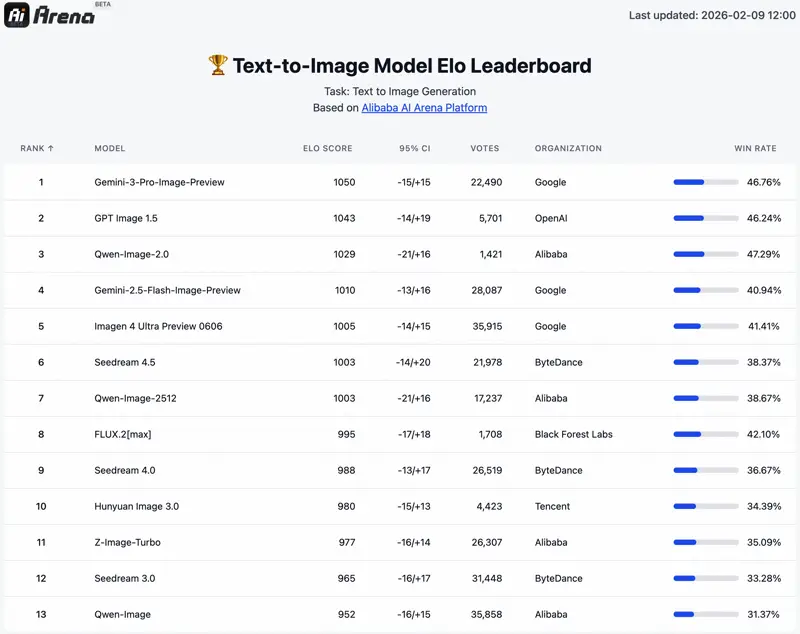

AI Arena Rankings (Blind Human Evaluation)

The AI Arena leaderboard uses an ELO rating system based on blind head-to-head comparisons where judges don't know which model produced which image:

AI Arena Text-to-Image Leaderboard — Qwen-Image-2.0 at #3 (ELO 1029, 47.29% win rate).

| Task | Qwen-Image-2.0 Rank | Ahead Of | Behind |

|---|---|---|---|

| Text-to-Image | #3 (ELO 1029) | Gemini-2.5-Flash, Imagen 4, Seedream 4.5, FLUX.2 | Gemini-3-Pro (1050), GPT-Image-1.5 (1043) |

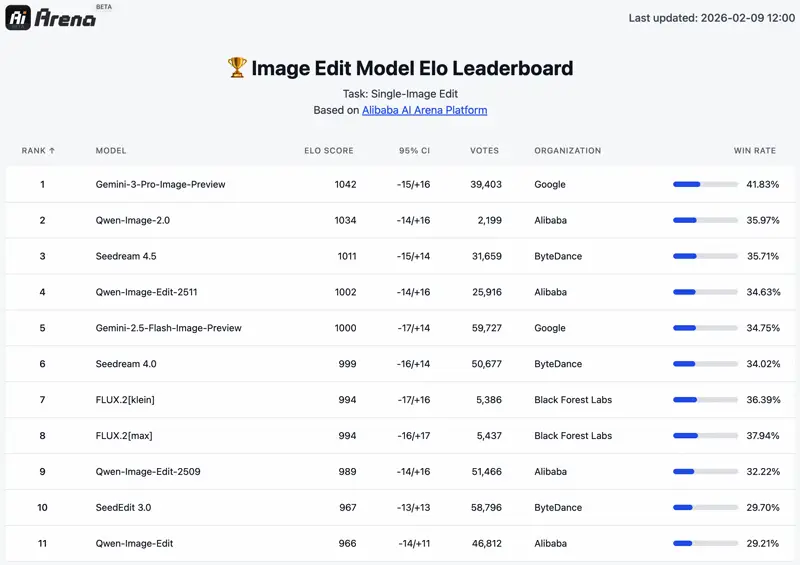

| Image Editing | #2 (ELO 1034) | Seedream 4.5, Qwen-Image-Edit-2511, FLUX.2 | Gemini-3-Pro (1042) |

AI Arena Image Edit Leaderboard — Qwen-Image-2.0 at #2 (ELO 1034, 35.97% win rate).
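To get a feel for what these rating gaps mean, the standard Elo formula converts a rating difference into an expected head-to-head score. The sketch below assumes the conventional logistic form with a scale of 400; the source does not state which Elo variant or scale AI Arena uses, and `elo_expected_score` is an illustrative name.

```python
def elo_expected_score(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Expected score of A against B under the standard Elo model.

    The 400-point scale is the conventional default, assumed here.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


if __name__ == "__main__":
    # Ratings quoted in the leaderboard table above.
    qwen, gemini = 1029, 1050
    print(f"Expected score vs Gemini-3-Pro: {elo_expected_score(qwen, gemini):.3f}")
```

Under this assumption, the 21-point gap between 1029 and 1050 corresponds to an expected score of roughly 0.47, so gaps of this size are close to a coin flip in any single comparison.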

Automated Benchmark Scores

| Benchmark | Qwen-Image-2.0 | GPT-Image-1 | FLUX.1 (12B) |
|---|---|---|---|
| GenEval | 0.91 | not reported | not reported |
| DPG-Bench | 88.32 | 85.15 | 83.84 |

On DPG-Bench (which measures prompt-following fidelity), Qwen-Image-2.0 outperforms GPT-Image-1 by 3.17 points and FLUX.1 by 4.48 points. The model is also rated as the strongest open-source image model on T2I-CoreBench for composition and reasoning tasks.

Qwen-Image-2.0 vs Competitors

| Feature | Qwen-Image-2.0 | GPT-Image-1.5 | Gemini 3 Pro Image | FLUX.2 Max |
|---|---|---|---|---|
| Parameters | 7B | Undisclosed | Undisclosed | ~12B+ |
| Unified Gen+Edit | Yes | Yes | Yes | No |
| Max Resolution | 2K native | 2K+ | 2K | 2K |
| Chinese Text Quality | Excellent | Good | Good | Limited |
| Inference Speed | 5–8s | 10–15s | 5–10s | 10–20s |
| Open Weights | Expected (Apache 2.0) | No | No | Partial |
| Local Deployment | Expected (~24GB VRAM) | No | No | Yes |
| Max Prompt Length | 1,000 tokens | ~4,000 chars | ~1,000 tokens | ~256 tokens |

The standout differentiator is that Qwen-Image-2.0 is set to be the only top-3 image model with open weights, once Alibaba releases them as expected. GPT-Image-1.5 and Gemini 3 Pro Image (Nano Banana Pro) are closed-source and API-only, while FLUX.2 offers partial weights but requires separate models for editing. For organizations that need on-premise deployment, fine-tuning control, or data privacy, that would make Qwen-Image-2.0 the strongest option available.

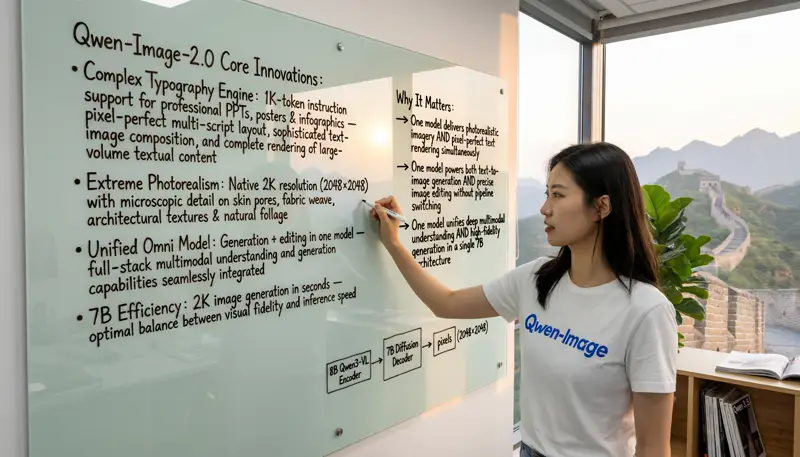

Text Rendering: The Killer Feature

If there's one area where Qwen-Image-2.0 genuinely leads the entire field, it's text rendering in generated images. The model can:

- Generate complete PowerPoint slides with accurate timelines, charts, and picture-in-picture compositions

- Render professional posters with mixed font sizes, weights, and colors — all correctly spelled

- Create multi-panel comics with consistent character design and readable speech bubbles

- Handle Chinese calligraphy including classical styles — it rendered nearly the entire "Preface to the Orchid Pavilion" with only a handful of character errors

- Place text on 3D surfaces like glass whiteboards, t-shirts, and curved bottles with correct perspective and lighting

This makes the model particularly valuable for e-commerce (product images with pricing overlays), marketing (social media graphics and banners), and content creation (infographics and presentation visuals).

AI-generated image by Qwen-Image-2.0 — note the legible text on the glass whiteboard, accurate rendering on the t-shirt, and realistic perspective throughout.

API Access & Pricing

Qwen-Image-2.0 is currently in invite-only API testing on Alibaba Cloud's DashScope (BaiLian) platform. A free demo is available on Qwen Chat for anyone to try.

Current Pricing (DashScope & Third-Party)

| Provider | Model | Price per Image |
|---|---|---|
| Alibaba Cloud DashScope | qwen-image-max | ¥0.50 (~$0.07) |
| Alibaba Cloud DashScope | qwen-image-plus | ¥0.20 (~$0.03) |
| Replicate | Qwen-Image | $0.030 |
| Fal.ai | Qwen-Image-Edit | $0.021 |

Note: These prices reflect the v1 series models currently available via API. Qwen-Image-2.0 specific pricing hasn't been finalized yet. Given the smaller model size (7B vs 20B), prices are expected to be competitive or lower.
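For budgeting, the per-image prices above can be turned into a quick batch estimate. This is a minimal sketch using only the v1-series figures from the table; the dictionary keys and the `batch_cost` helper are illustrative names, the DashScope USD values are the approximate conversions quoted above, and none of this reflects Qwen-Image-2.0 pricing, which has not been announced.

```python
# Per-image prices in USD, copied from the pricing table above (v1-series models).
PRICE_PER_IMAGE_USD = {
    ("dashscope", "qwen-image-max"): 0.07,   # approximate conversion of ¥0.50
    ("dashscope", "qwen-image-plus"): 0.03,  # approximate conversion of ¥0.20
    ("replicate", "qwen-image"): 0.030,
    ("fal.ai", "qwen-image-edit"): 0.021,
}


def batch_cost(provider: str, model: str, n_images: int) -> float:
    """Estimated cost in USD for generating n_images, rounded to cents."""
    if n_images < 0:
        raise ValueError("n_images must be non-negative")
    return round(PRICE_PER_IMAGE_USD[(provider, model)] * n_images, 2)


if __name__ == "__main__":
    for (provider, model) in PRICE_PER_IMAGE_USD:
        print(f"{provider:>10} / {model:<16} 1,000 images: ${batch_cost(provider, model, 1000):.2f}")
```

At these rates, a batch of 1,000 images comes to about $21 on Fal.ai and about $30 on the DashScope Plus tier.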

Running Locally & Hardware Requirements

The open-source weights for Qwen-Image-2.0 are expected to be released under Apache 2.0 approximately one month after launch (based on Alibaba's pattern with previous Qwen-Image releases). Meanwhile, the v1 models are fully available for local deployment.

Expected Hardware Requirements

| Setup | VRAM | Notes |
|---|---|---|
| BF16 (unquantized) | ~24GB | RTX 4090, A6000, or similar |
| FP8 quantized | ~12–16GB | RTX 4070 Ti Super / 3090 |
| Layer-by-layer offload | 4GB VRAM | Via DiffSynth-Studio (slow but works) |

DiffSynth-Studio provides comprehensive local deployment support including low-VRAM layer-by-layer offload (as low as 4GB), FP8 quantization, and LoRA/full fine-tuning. For high-performance inference, both vLLM-Omni and SGLang-Diffusion offer day-0 support, and Qwen-Image-Lightning achieves a ~42× speedup with LightX2V acceleration.
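As a rough cross-check on the expected hardware figures, weight memory can be estimated as parameter count times bytes per parameter. The sketch below is a back-of-the-envelope calculation, not a measurement: `weight_memory_gib` is an illustrative helper, it counts weights only (activations, the VAE, and framework overhead come on top), and the source does not say whether its VRAM figures include the 8B encoder.

```python
# Bytes used per parameter for the two precisions in the table above.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0}


def weight_memory_gib(params_billions: float, dtype: str) -> float:
    """Approximate memory for model weights alone, in GiB."""
    return params_billions * 1e9 * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    components = {"7B diffusion decoder": 7.0, "8B Qwen3-VL encoder": 8.0}
    for name, size in components.items():
        for dtype in BYTES_PER_PARAM:
            print(f"{name:<22} {dtype}: {weight_memory_gib(size, dtype):.1f} GiB")
```

Under these assumptions the 7B decoder alone needs about 13 GiB in BF16 and about 6.5 GiB in FP8, while the 8B encoder would add roughly 15 GiB in BF16 if it stayed on the GPU at the same time. That is one reason offloading and quantization matter for 24GB cards.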

For more details on running Qwen models locally, see our complete local deployment guide and hardware requirements breakdown.

Quick-Start Code Examples

Text-to-Image Generation

```python
import torch
from diffusers import QwenImagePipeline

# Qwen-Image-2.0 weights are not yet released, so this example loads the
# v1-series checkpoint Qwen-Image-2512.
pipe = QwenImagePipeline.from_pretrained(
    "Qwen/Qwen-Image-2512",
    torch_dtype=torch.bfloat16,
).to("cuda")

image = pipe(
    prompt="A professional infographic about renewable energy, with charts and statistics, clean modern design",
    negative_prompt="blurry, low quality, distorted text",
    width=1664,   # roughly 16:9
    height=928,
    num_inference_steps=50,
    true_cfg_scale=4.0,
    # Fixed seed so repeated runs produce the same image.
    generator=torch.Generator(device="cuda").manual_seed(42),
).images[0]

image.save("output.png")
```

Image Editing

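```python
import torch
from diffusers import QwenImageEditPlusPipeline
from PIL import Image

# Editing also uses a v1-series checkpoint until Qwen-Image-2.0 weights ship.
pipeline = QwenImageEditPlusPipeline.from_pretrained(
    "Qwen/Qwen-Image-Edit-2511",
    torch_dtype=torch.bfloat16,
).to("cuda")

source_image = Image.open("photo.jpg").convert("RGB")

result = pipeline(
    image=[source_image],  # a list, since the pipeline accepts multiple input images
    prompt="Change the background to a sunset beach scene",
    negative_prompt=" ",   # true CFG is only applied when a negative prompt is given
    num_inference_steps=40,
    true_cfg_scale=4.0,
    guidance_scale=1.0,
).images[0]

result.save("edited.png")
```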

Requirements: transformers >= 4.51.3 and latest diffusers from source (pip install git+https://github.com/huggingface/diffusers).

Version History & Evolution

| Date | Model | Key Advancement |
|---|---|---|
| Aug 2025 | Qwen-Image | 20B MMDiT, strong text rendering, Apache 2.0 |
| Aug 2025 | Qwen-Image-Edit | Dedicated single-image editing model |
| Sep 2025 | Qwen-Image-Edit-2509 | Multi-image editing support |
| Dec 2025 | Qwen-Image-2512 | Enhanced detail, texture quality, realism |
| Dec 2025 | Qwen-Image-Edit-2511 | Improved consistency across edits |
| Dec 2025 | Qwen-Image-Layered | Layered image generation |
| Feb 10, 2026 | Qwen-Image-2.0 | Unified 7B, native 2K, 1000-token prompts |

The evolution shows a clear trajectory: from specialized models (separate generation and editing) toward a unified, smaller, more capable architecture. The 65% parameter reduction (20B → 7B) while improving quality across all metrics is a significant engineering achievement.
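The 65% figure follows directly from the two parameter counts. A small sketch of the arithmetic, using the 20B and 7B figures from the table above (the helper names are illustrative):

```python
def reduction_percent(old: float, new: float) -> float:
    """Percentage by which `new` is smaller than `old`."""
    return (old - new) / old * 100


def size_ratio(old: float, new: float) -> float:
    """How many times larger `old` is than `new`."""
    return old / new


if __name__ == "__main__":
    v1_params, v2_params = 20.0, 7.0  # billions, from the version table
    print(f"Reduction: {reduction_percent(v1_params, v2_params):.0f}%")
    print(f"Size ratio: {size_ratio(v1_params, v2_params):.2f}x")
```

The same numbers give a size ratio of about 2.86, which is where the "3× smaller" shorthand comes from.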

Ecosystem & Community

The Qwen-Image ecosystem has grown rapidly since its August 2025 launch:

- 7,400+ GitHub stars and 429 forks on the official repository

- 484+ LoRA adapters available on Hugging Face for custom styles and domains

- ComfyUI native support for workflow integration

- Multi-hardware support via LightX2V: NVIDIA, Hygon, Metax, Ascend, and Cambricon

- LeMiCa cache acceleration achieving ~3× lossless speedup

The prior Qwen-Image series models are already fully open-sourced under Apache 2.0, with weights available on Hugging Face and ModelScope. Community-created LoRA fine-tunes (like MajicBeauty for portrait enhancement) demonstrate the model's adaptability to specialized use cases. Qwen-Image-2.0 weights are expected to follow the same open-source path.

Frequently Asked Questions

Is Qwen-Image-2.0 free to use?

Yes — you can try it for free at chat.qwen.ai. For API access, DashScope pricing starts at ~$0.03/image for the Plus tier. The open-source weights (expected under Apache 2.0) will allow unlimited free local use once released.

Can I run Qwen-Image-2.0 on my own GPU?

Once weights are released, yes. At 7B parameters, the model is expected to fit on a 24GB GPU in BF16, or run with as little as 4GB VRAM using DiffSynth-Studio's layer-by-layer offload. See our hardware requirements guide for detailed recommendations.

How does it compare to Midjourney or DALL-E?

On the AI Arena blind evaluation leaderboard, Qwen-Image-2.0 ranks #3 for text-to-image and #2 for image editing. Its key advantages over Midjourney and DALL-E are its expected open weights, which would allow local deployment and fine-tuning, and its significantly better multilingual text rendering.

What's the difference between Qwen-Image-2.0 and Qwen 3.5's multimodal capabilities?

Qwen 3.5 has unified vision-language understanding — it can analyze images, documents, and video. Qwen-Image-2.0 is a dedicated generation model — it creates and edits images. They're complementary: use Qwen 3.5 to understand visual content, and Qwen-Image-2.0 to generate it.

Is Qwen-Image-2.0 open source?

The previous Qwen-Image series is fully open-source under Apache 2.0. Qwen-Image-2.0's weights haven't been released yet as of February 2026, but Alibaba has consistently open-sourced their models — typically within ~1 month of launch. The Apache 2.0 license allows free commercial use, modification, and redistribution.

What happened to the separate Qwen-Image-Edit model?

It's been absorbed into Qwen-Image-2.0. The new unified architecture handles both generation and editing in one model, eliminating the need for separate specialized models.

Updated · February 2026